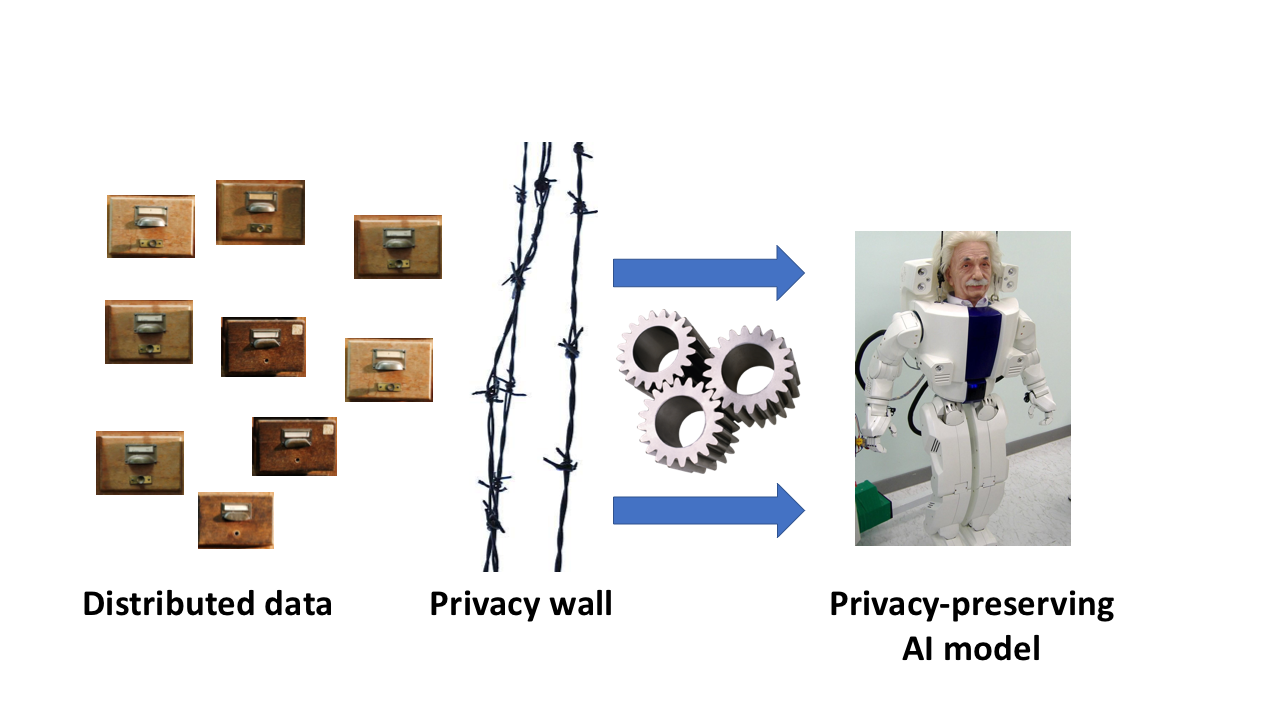

A new method developed by researchers of the Finnish Center for Artificial Intelligence (FCAI) at the University of Helsinki and Aalto University, together with researchers from Waseda University in Tokyo, can learn from data distributed across, for example, mobile phones while guaranteeing the privacy of the data subjects.

Modern AI is based on learning from data, and in many applications, such as those using health or behavioural data, the data are private and need protection.

Machine learning needs security and privacy: both the data used for learning and the resulting model can leak sensitive information.

Based on the concept of differential privacy, the method guarantees that the published model or result reveals only a limited amount of information about any individual data subject.

Because the data stay distributed, for example on the users' own devices, the new method avoids the risks inherent in centralised data processing, while the model is still learned under strict privacy protection.
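To give a rough idea of how distributed data and differential privacy can be combined, here is a minimal sketch of a simple distributed Gaussian mechanism. It is an illustration under stated assumptions, not the authors' implementation. Each of the n devices clips its own value to `[-bound, bound]` and adds Gaussian noise with variance σ²/n, so the aggregated sum carries noise of variance σ². The noise scale uses the classic Gaussian-mechanism calibration σ = Δ·√(2 ln(1.25/δ))/ε, which holds for 0 < ε < 1. The function names and parameters are hypothetical. The sketch assumes that every device behaves honestly, and it leaves out the secure aggregation a real system would need: a single device's share carries only 1/n of the noise and should not be revealed on its own.

```python
import math
import random


def gaussian_sigma(epsilon: float, delta: float, sensitivity: float) -> float:
    """Noise scale of the classic Gaussian mechanism, valid for 0 < epsilon < 1."""
    if not 0.0 < epsilon < 1.0:
        raise ValueError("this calibration assumes 0 < epsilon < 1")
    return math.sqrt(2.0 * math.log(1.25 / delta)) * sensitivity / epsilon


def device_share(value: float, bound: float, sigma: float, n_devices: int,
                 rng: random.Random) -> float:
    """Hypothetical per-device step: clip the value, then add a 1/n share of the noise."""
    clipped = max(-bound, min(bound, value))
    # Variance sigma^2 / n per device, so the n independent shares sum to variance sigma^2.
    return clipped + rng.gauss(0.0, sigma / math.sqrt(n_devices))


def private_sum(values, epsilon: float, delta: float, bound: float,
                seed: int = 0) -> float:
    """Sum the noisy shares; assumes every device follows the protocol honestly."""
    # One seeded generator simulates the local randomness of all devices.
    rng = random.Random(seed)
    # Adding or removing one person's clipped value changes the sum by at most `bound`.
    sigma = gaussian_sigma(epsilon, delta, sensitivity=bound)
    n = len(values)
    return sum(device_share(v, bound, sigma, n, rng) for v in values)


if __name__ == "__main__":
    readings = [0.2, -0.7, 1.5, 0.9, -0.1]
    print(private_sum(readings, epsilon=0.5, delta=1e-5, bound=1.0))
```

Splitting the noise across devices means that no single party has to hold the raw data, yet the released total is as well protected as if a trusted curator had added all the noise itself.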

Privacy-aware machine learning is one key to tackling data scarcity and dependability, both of which FCAI has identified as major bottlenecks for wider adoption of AI. Strong privacy protection encourages people to entrust their data to machine learning systems without having to worry about negative consequences of their participation.

The method was published and presented in December at NIPS, the premier annual machine learning conference: https://papers.nips.cc/paper/6915-differentially-private-bayesian-learning-on-distributed-data.

FCAI researchers involved in the work: Mikko Heikkilä, Eemil Lagerspetz, Sasu Tarkoma, Samuel Kaski, and Antti Honkela.